Of Fishbones and Philosophy

On the off chance that you, dear reader, are thinking that there is precious little overlap between the skeletons left over from dead fish and the high art of philosophy, let me set your mind at rest. You are correct; there isn’t much. Nonetheless, this installment isn’t a shortened quip-of-a-column designed to note this simple observation and then to make a quick, albeit graceful exit. In point of fact, the fishbones that I am referring to have a great deal to do with philosophy, in general, and epistemology, specifically.

For those you aren’t aware, the fishbone or Ishikawa diagram (after Kaoru Ishikawa) is a way of cataloging the possible, specific causes of an observed event as a way of inferring which one is the most likely. Its primary application is to those events where the effect is clearly and obviously identifiable but where the trigger of that event is unknown or, at least, unobservable. One can usually find these diagrams applied in industrial or technological settings where a fault in a complex system rears its ugly head but the failure mode is totally or partially unknown.

Now it is one of those trendy nuggets of common knowledge that philosophy is one of those subjects designed for the technically-challenged to while away their time considering how many angels can dance on the head of a pin or whether to push the fat man onto the tracks in order to save the lives of the many passengers on the train. No practical applications can be found in philosophy. It has nothing important to offer workplaces where holes are drilled, sheet metal bent, circuits soldered, products built, and so on.

The fishbone diagram speaks otherwise – it deals with what is real and practical and with what we know and how we know it in a practical setting. It marries concepts of ontology and, more importantly, epistemology with the seemingly humdrum worlds of quality assurance and manufacturing.

To appreciate exactly how this odd marriage is affected, let’s first start with a distinction that is made in fishbone analysis between the proximate cause and the root cause. A practical example will serve much better here than any amount of abstract generalizations.

Suppose that as we are strolling through ancient Athens, we stumble upon a dead body. We recognize that it is our sometime companion by the name of Socrates. Having been fond of that abrasive gadfly and possessing a slice of curiosity consistent with being an ancient Greek, we start trying to determine just what killed Socrates. One of us, who works in the new Athenian pottery plant where the emerging science of quality management is practiced, recommends making a fishbone diagram to help organize our investigation.

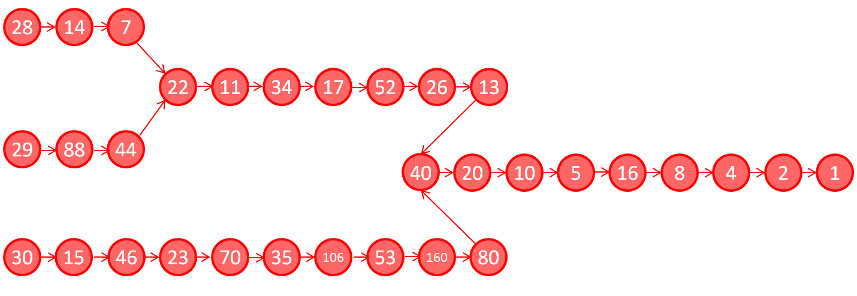

Inside the head of the fish we place the key observation that Socrates is dead. Off the central spine, we string possible causes of death, grouped into categories that make sense to us. After a lot of discussion, we agree these four: Divine Intervention, Natural Causes, Accidental Death, and Foul Play. Under each of these broad headings we add specific instances. For example, some of us have heard rumors of the dead man’s impiety, so perhaps Zeus has struck him down with a thunderbolt. Other suggest that being hit with a discus was the cause of death, just like what happened to uncle Telemachus at the last Olympic Games. We continue on until we have our finished fishbone.

This version of the fishbone diagram aims at helping us determine the proximate cause. We want to know what actually killed him without, at this stage, trying to figure out why (although the question of ‘why’ helped us in populating the list).

We then, in good logical fashion, start looking for observations that either strengthen or weaken each of the bones in our diagram. We find no evidence of charring or submergence in water, so we argue that Divine Intervention is highly unlikely. There is no blood or signs of blunt force trauma, so scratch all the possibilities under Accidental Death. One of us notes that his belongings are all present and that his face is peaceful and his body shows no subtle signs of violence like what might be attributed to strangulation or smothering, so we think murder very unlikely. Finally, one of us detects a faint whiff of a distinct odor and concludes that Socrates has died by drinking hemlock.

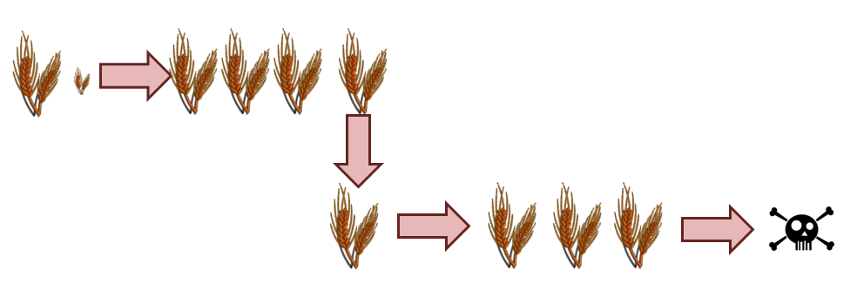

In fishbone analysis, hemlock poisoning is the proximate cause – the direct, previous link in the chain of causation that led to his death. Note that we haven’t actually seen Socrates consume the lethal cocktail; we are simply inferring it based on the effect (he’s dead) and the smell (likeliest cause). The next step is to determine the root cause – the reason or motivation for his consumption of the hemlock.

We find, after collecting a different type of observations, that he was executed by the Polis of Athens for impiety and for corrupting the morals of the youths of our city state. We generally fill out this step by interviewing people and collecting human impressions rather than physical evidence. A what point we decide that we’ve hit the root is up to us. We can stop with the death sentence passed down by the Athenian court or we can look to the politics that led to that sentence. We can stop with the politics or dig further into the social and demographic forces that led to Athenian democracy so disposed to dispatch the father of Western thought. We can trace events back to Hippias the tyrant, or back to Homer, or wherever.

This sense of arbitrariness isn’t confined solely to where we cut off the determination of the root cause. We also limited our universe of explanations in determining the proximate cause. We can’t consider everything – how about dryads, sylphs, and satyrs?

In other words, all of us start our fishbone analysis with a Bayesian a priori expectation of likeliest causes and we apply, whether consciously or not, Occam’s razor to simplify. Let’s reflect on this point a bit more. Doing so brings into sharper focus the distinction between what we think we know, what we actually know, and what we don’t know; between the universe of knowable, unknown, and unknowable. Ultimately, what we are dealing with is deep questions of epistemology masquerading as crime scene investigation.

The situation is even more interesting when one has an observable effect with no discernable cause. Is the cause simply unknown or is it unknowable? And how do we know in which category it goes without knowing it in the first place?

This epistemological division is even further muddied when we deal with indirect observations provide by tools (usually computers). Consider the case where a remote machine (perhaps in orbit) communicates with another machine, which unpacks the electronic signals it receives. If a problem is observed (a part is reported dead, for example), what does this actually mean? Where does the fault lie? Is it in the first machine or the second one? Could the second one be spoofing by accident or malice (hacking) the fault on the first. How does one know and where does one start? And if one is willing to extend the concept of a second machine to include human beings and their senses then the line gets even more blurred between observer and observed. Where does the fault lie, with our machines or with ourselves, and how does one know?

I will close on that note of uncertainty and confusion with an aporetic ending in honor of Socrates. And all of it came from a little fishbone, whose most common practitioners would most likely tell you that they are not interested in anything so impractical as philosophy.