Self-Reference and Paradoxes

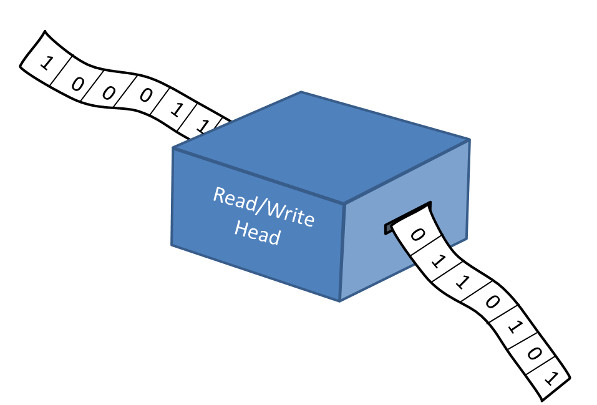

The essence of Gödel’s idea is to encode not just the facts but also the ‘facts about the facts’ of the formal system being examined within the framework of that same system. This meta-mathematical technique allowed Gödel to prove simple facts like ‘2 + 2 = 4’ and hard facts like ‘not all true statements are axioms or theorems – some are simply out of reach of the formal system to prove’ within the context of the system itself. The hard facts come from the system talking about or referring to itself with its own language.

As astonishing as Gödel’s theorem is, the concept of paradoxes within self-referential systems is actually a very common experience in natural language. All of us have played at one time or another with odd sentences like ‘This sentence is false!’. Examined from a strictly mechanical and logical vantage, how should that sentence be parsed? If the sentence is true, then it is lying to us. If it is false, then it is sweetly and innocently telling us the truth. This example of the liar’s paradox has been known since antiquity, and variations of it have appeared throughout the ages in stories of all sorts.

Perhaps the most famous example comes from the original Star Trek television series in an episode entitled ‘I, Mudd’. In this installment of the ongoing adventures of the starship Enterprise, an impish Captain Kirk defeats a colony of androids that holds him and his crew hostage by exploiting their inability to be meta.

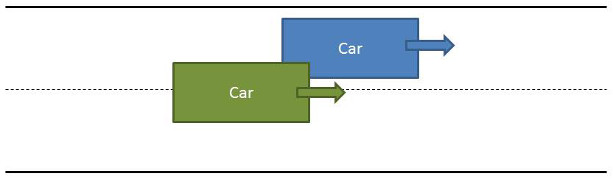

There are actually a host of paradoxes (or antinomies in the technical speak) that some dwerping around on the internet can uncover in just a handful of clicks. They all arise when a formal system talks about itself in its own language, and often their paradoxical nature arises when they talk about something of a negative nature. The sentence ‘This sentence is true.’ is fine while ‘This sentence is false.’ is not.
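The difference between the two sentences can be checked mechanically. The following is only a minimal sketch, assuming that a self-referential sentence is "fine" when at least one truth value agrees with what the sentence says about itself; the function name `consistent_values` is made up for this illustration.

```python
def consistent_values(claimed):
    """Return the truth values t for which a sentence saying
    'this sentence is <claimed>' agrees with its own claim."""
    # If the sentence has truth value t, then its claim "my value is
    # <claimed>" is true exactly when t == claimed. The sentence is
    # consistent when its value matches the truth of that claim.
    return [t for t in (True, False) if t == (t == claimed)]


truth_teller = consistent_values(True)   # 'This sentence is true.'
liar = consistent_values(False)          # 'This sentence is false.'

print(truth_teller)  # [True, False]
print(liar)          # []
```

The truth-teller is consistent under either value (it is harmless, if uninformative), while the liar has no consistent value at all.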

Not all of the examples show up as either interesting but useless tricks of the spoken language or as formal encodings in mathematical logic. One of the most interesting cases deals with libraries of either the brick and mortar variety or existing solely on hard drives and in RAM and FTP packets.

Consider for a moment that you’ve been given charge of a library. Properly speaking, a library has two basic components: the books to read and a system to catalog and locate the books so that they can be read. Now thinking about the books is no problem. They are the atoms of the system and so can be examined separately or in groups or classes. It is reasonable and natural to talk about a single book like ‘Moby Dick’ and to catalog this book along with all the other separate works that the library contains. It is also reasonable and natural to talk about all books written by Herman Melville and to catalog them within a new list with a title like ‘List of works by H. Melville’. A similar list can be made whose grouping criterion selects books about the books by Melville. This list would have a title like ‘List of critiques and reviews of the works by H. Melville’.

An obvious extension would be to construct something like the following list.

- List of critiques and reviews of H. Melville

- List of critiques and reviews of J. R. R. Tolkien

- List of critiques and reviews of U. Eco

- List of critiques and reviews of R. Stout

- List of critiques and reviews of G. K. Chesterton

- List of critiques and reviews of A. Christie

- ….

Since the lists are themselves written works, what status do they have in the cataloging system? Should there also be lists of lists? If so, how deep should their construction go? At some point won’t we arrive at lists that have to refer to themselves, and what do we do when we reach that point? Should the library catalog have a reference to itself as a written work?

Bertrand Russell wrestled with these questions in the context of set theory around the turn of the 20th century. To continue on with the library example, Russell would label the ‘List of Author Critiques and Reviews’ as a normal set since it is a collection of things that doesn’t include itself. He would label as an abnormal set any list that has itself as a member – in this case a catalog (i.e. list) of all lists pertaining to the library. General feeling suggests that the normal sets are well behaved but the abnormal sets are likely to cause problems. So let’s just focus on the normal sets. Russell asks the following question about the normal sets: Is the set, R, of all normal sets, itself normal or abnormal? If R is normal, then it must appear as a member in its own listing (R lists every normal set), thus making R abnormal. Alternatively, if R is abnormal, it can’t be listed as a member within itself (R lists only normal sets) and, therefore, it must be normal. No matter which way you start you are led to a contradiction.
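Russell’s argument can be played out on a toy catalog. What follows is a rough sketch rather than a formal treatment: the library contents, the helper names `is_normal` and `r_is_consistent`, and the dictionary representation are all assumptions made for illustration. The idea is to add R in both possible ways (listing itself or not) and check whether R then records exactly the normal lists.

```python
def is_normal(name, catalog):
    """A list is 'normal' if it does not name itself among its entries."""
    return name not in catalog[name]


# Hypothetical library: each list name maps to the set of entries it records.
library = {
    "Critiques of H. Melville": {"A review of Moby Dick"},
    "Critiques of U. Eco": {"A review of The Name of the Rose"},
    "Catalog of all lists": {
        "Critiques of H. Melville",
        "Critiques of U. Eco",
        "Catalog of all lists",  # lists itself, so it is abnormal
    },
}


def r_is_consistent(library, r_lists_itself):
    """Add R, the list of all normal lists, and check it against its own rule."""
    entries = {name for name in library if is_normal(name, library)}
    if r_lists_itself:
        entries.add("R")
    trial = {**library, "R": entries}
    # R is correct only if it records exactly the normal lists, R included.
    should_contain = {name for name in trial if is_normal(name, trial)}
    return entries == should_contain


print(r_is_consistent(library, r_lists_itself=True))   # False
print(r_is_consistent(library, r_lists_itself=False))  # False
```

Either choice fails: if R lists itself it is abnormal and should not be there, and if it leaves itself out it is normal and should be.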

The natural tendency, at this point, is to cry foul and to suggest that the whole thing is being drawn out to an absurd length. Short and simple answers to each of the questions posed in the earlier paragraph come to mind with the application of a little common sense. Lists should themselves be cataloged only if they are independent works that are distinct parts of the library. The overall library catalog need not list itself because its primary function is to help the patron find all the other books, publications, and related works in the library. If the patron can find the catalog, then there is no need to have it listed within itself. On the other hand, if the patron cannot find the catalog, having it listed within itself serves no purpose – the patron will need something else to point him towards the catalog.

And as far as Russell and his perfidious paradox are concerned, who cares? This might be a matter to worry about if one is a stuffy logician who can’t get a date on a Saturday night, but normal people (does this mean Russell and his kind are abnormal?) have better things to do with their lives than worry about such ridiculous ideas.

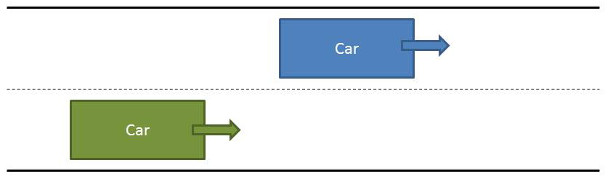

Despite these responses, or maybe because of them, we should care. Application of common sense is actually quite sophisticated even if we are quite unaware of the subtleties involved. In all of these common-sensical responses there is an implicit assumption about something above or outside. If the patron can’t find the library catalog, well then that is what a librarian is for – to point the way to the catalog. The librarian doesn’t need to be referred to or listed in the catalog. He sits outside the system and can act as an entry point into the system. If there is a paradox in set theory, not to worry, there are more important things than complete consistency in formal systems.

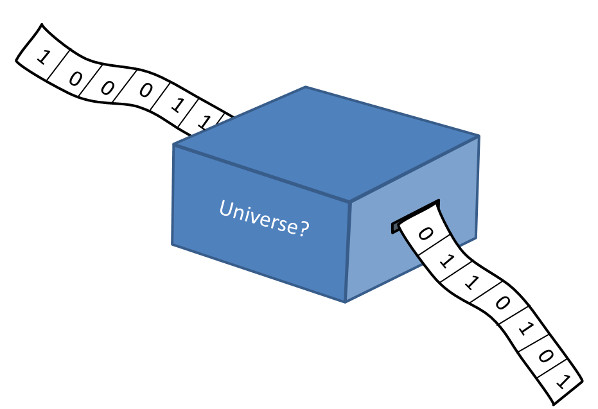

This concept of sitting outside the system is at the heart of the current differences between human intelligence and machine intelligence. The latter, codified by the formal rules of logic, can’t resolve these kinds of paradoxes precisely because it can’t step outside itself like people can. And maybe it never will.