Logic and Cause & Effect

There is a famous scene in the movie All the President’s Men where Bob Woodward (played by Robert Redford) and Carl Bernstein (played by Dustin Hoffman) are struggling to decide whether they have enough facts to keep publishing their stories about Watergate. As they eat at a local fast-food joint, they discuss what they can ‘deduce’ from what they already know. Woodward essentially says that they can infer what they need circumstantially. He defends this position with a discussion about logic and cause and effect.

In essence, what he says is that if you look out your window before going to bed one fine, cold winter night and see that the ground is snow-free, and then wake up the next morning to a winter wonderland of white all around, you can conclude that it snowed overnight, even though you didn’t see it. It seems a simple application of cause and effect. But arguing for causes by observing effects can be tricky, since causes are often elusive even when their effects are quite observable.

Consider the basic demonstration of gravity. Hold an object up and then let go. The effect is clearly observable: the object falls to the ground. The cause, on the other hand, is quite mysterious. Just what is gravity? None of us can feel it or see it or sense it in any way. The word gravity is just a name we give to this cause. The genius of Newton gave us a way to match the nature of the effect (distance and speed as a function of time) to the geometry of the situation (where the object is in relation to the Earth), but he didn’t really explain it. Nor do the modern explanations of gravity, as the bending of spacetime or as the exchange of (still hypothetical) gravitons, do anything more than explain one mysterious concept in terms of others (which, granted, provide a more complete way of predicting the outcome).

The situation becomes substantially more complex when several causes can be present, all of which result in a particular effect. It’s no wonder that misapplications of cause and effect abound even outside the realms of science. Sometimes this leads to amusingly bad arguments; sometimes the results are more frustrating and thorny.

At the heart of the logic of cause and effect is the idea that if a cause, call it $P$, is present, then its effect, call it $Q$, must follow. Symbolically, this linkage is denoted by $P \rightarrow Q$, which is the familiar construct of propositional logic that was discussed in this column in earlier postings.
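For anyone who wants that construct spelled out, $P \rightarrow Q$ read as a truth-functional connective (material implication) is false only when $P$ is true and $Q$ is false. The short Python sketch below tabulates it. The function names `implies` and `truth_table` are my own illustrative choices, not anything from the earlier postings, and the sketch captures only the logical form, not any notion of physical causation.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    """Material implication: P -> Q is equivalent to (not P) or Q."""
    return (not p) or q


def truth_table() -> list[tuple[bool, bool, bool]]:
    """Return (P, Q, P -> Q) for every assignment of truth values."""
    return [(p, q, implies(p, q)) for p, q in product([True, False], repeat=2)]


if __name__ == "__main__":
    print("P      Q      P -> Q")
    for p, q, result in truth_table():
        print(f"{p!s:6} {q!s:6} {result}")
```

The only row where the implication fails is the one where the cause is present and the effect is absent.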

The question is what can be said about $P$ based on an observation of $Q$ or vice versa. The four options are:

- Observe $P$ and infer something about $Q$

- Fail to observe $P$ and infer something about $Q$

- Fail to observe $Q$ and infer something about $P$

- Observe $Q$ and infer something about $P$

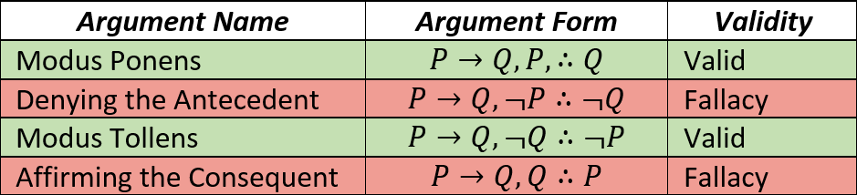

The usual names for these options and their logical validity are summarized symbolically in the table above.
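The validity column of that table can be reproduced mechanically: an argument form is valid exactly when no assignment of truth values makes all of its premises true and its conclusion false. The Python sketch below checks each of the four forms by brute force over the four possible assignments of $P$ and $Q$. The names (`FORMS`, `counterexamples`, `is_valid`) are hypothetical and purely illustrative.

```python
from itertools import product
from typing import Callable

# A proposition is modeled as a function of the truth values (p, q).
Prop = Callable[[bool, bool], bool]


def implies(p: bool, q: bool) -> bool:
    """Material implication P -> Q."""
    return (not p) or q


# Each form shares the premise P -> Q and adds one observation.
# Values are (observation, conclusion).
FORMS: dict[str, tuple[Prop, Prop]] = {
    # Observe P, conclude Q.
    "Modus Ponens": (lambda p, q: p, lambda p, q: q),
    # Fail to observe P, conclude not Q.
    "Denying the Antecedent": (lambda p, q: not p, lambda p, q: not q),
    # Fail to observe Q, conclude not P.
    "Modus Tollens": (lambda p, q: not q, lambda p, q: not p),
    # Observe Q, conclude P.
    "Affirming the Consequent": (lambda p, q: q, lambda p, q: p),
}


def counterexamples(observation: Prop, conclusion: Prop) -> list[tuple[bool, bool]]:
    """Assignments where both premises hold but the conclusion fails."""
    return [
        (p, q)
        for p, q in product([True, False], repeat=2)
        if implies(p, q) and observation(p, q) and not conclusion(p, q)
    ]


def is_valid(observation: Prop, conclusion: Prop) -> bool:
    """A form is valid exactly when it has no counterexample."""
    return not counterexamples(observation, conclusion)


if __name__ == "__main__":
    for name, (obs, concl) in FORMS.items():
        verdict = "valid" if is_valid(obs, concl) else "invalid"
        print(f"{name}: {verdict}; counterexamples {counterexamples(obs, concl)}")
```

Run as a script, it reports Modus Ponens and Modus Tollens as valid, and both fallacies as invalid with the same single counterexample `(False, True)`: $P$ false and $Q$ true, or, in terms of weather, wet ground on a day when it is not raining.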

This innocent-looking table can lull one into thinking that good arguments are easily sifted from bad ones, and that expectation would be correct, but only for simple examples. Bo Bennett’s website, Logically Fallacious, has hundreds of examples of all sorts of fallacies, both formal and informal. These have been specially crafted to make detecting the fallacy nice and easy. Often they are also fun to read, and some are laugh-out-loud funny.

I have neither Dr. Bennett’s devotion to cataloging fallacies nor his sense of humor, so I will stick first to some easy examples of the meteorological type with which we began. I’ll then look at just where things go awry, and I’ll emphasize that, for things to move forward, we often have to ‘embrace the fallacies’, specifically Affirming the Consequent.

Suppose that you look out the window and you see that it is raining. Literally, you are seeing water come from the sky and hit the earth. Furthermore, you can readily see that when the water strikes it wets the ground. Through observation, you’ve determined the causal link that ‘if it is raining then the ground is wet’. The clauses ‘it is raining’ and ‘the ground is wet’ abbreviate to $P$ and $Q$, respectively.

With this fact established, you no longer need to observe the ground directly during rainfall to know that it is wet. If some other source, say a morning weather report, tells you that “it is currently raining in the metropolitan area,” then you can immediately deduce that the ground is wet. This valid deduction is the content of Modus Ponens.

But what can you do with other observations? That is the key question since, as argued above, the cause is often very difficult to observe (more on this in a bit).

Well, Modus Tollens tells us that if we observe that the ground is dry, then we can immediately deduce that it is not raining. I personally use this ‘trick’ whenever the day is hazy or foggy and the rain, if there is any, is too fine to be seen or heard: if the ground looks dry, then it isn’t raining.

However, if the ground is wet, I am stuck and my deductive chain fails. The reason is that the ground could be wet for a bunch of other reasons. Maybe it snowed overnight but the snow melted before I made my observation. More probably, my neighbors watered their lawns in the early morning hours with those automatic, beneath-the-ground sprinkler systems that have proved so popular in my neighborhood. Observing that the ground is wet merely tells me that one of several possibilities was the cause; concluding from it that it rained would be Affirming the Consequent.

The same line of argument also leads me to reject the conclusion that the ground is dry when I observe that it is not raining (Denying the Antecedent). Again, several causes can lead to the same effect, so the absence of a single cause does not support the conclusion that the effect is also absent.
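To see concretely why several causes break both of those inferences, here is a small Python sketch of a hypothetical three-cause model (rain, sprinklers, melted snow) in which the ground is wet whenever at least one cause occurred. The causal link ‘if it is raining then the ground is wet’ holds in every possible world of the model, yet enumerating the worlds turns up several in which the ground is wet without any rain. The model and its names are my own simplification for illustration.

```python
from itertools import product

# Hypothetical model: the ground is wet if any one of these causes occurred.
CAUSES = ("rain", "sprinkler", "snowmelt")


def ground_is_wet(world: dict[str, bool]) -> bool:
    """Effect Q: wet ground follows from any single cause."""
    return any(world[cause] for cause in CAUSES)


def all_worlds() -> list[dict[str, bool]]:
    """Every combination of causes being present or absent."""
    return [dict(zip(CAUSES, values))
            for values in product([True, False], repeat=len(CAUSES))]


def wet_without_rain() -> list[dict[str, bool]]:
    """Worlds where Q holds while P does not."""
    return [w for w in all_worlds() if ground_is_wet(w) and not w["rain"]]


if __name__ == "__main__":
    # The causal link P -> Q holds in every world of this model...
    assert all(ground_is_wet(w) for w in all_worlds() if w["rain"])
    # ...yet wet ground is compatible with a dry sky.
    for world in wet_without_rain():
        print(world)
```

The three worlds it prints are each a counterexample to Affirming the Consequent (the ground is wet, so it rained) and to Denying the Antecedent (it isn’t raining, so the ground is dry).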

Now, all of this sounds neat and tidy, but the world is never so clear-cut. The linkage between cause and effect is difficult to observe completely and, in many cases, the cause can’t be observed directly. For example, if I observe that the ground is wet but I can’t see it raining, I may still conclude that it is raining, even though I would be committing the formal error of Affirming the Consequent. I would do this probabilistically, arguing that the other causes are very unlikely. Perhaps the street in front of my house is wet in a way that sprinklers alone are unlikely to explain. Or maybe it is summer and the likelihood of snow is small. As compelling as these arguments may be, a water main break out of my line of sight may explain everything.
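One way to make that probabilistic argument concrete, and it is my choice of technique rather than anything the argument depends on, is Bayes’ rule. The Python sketch below assumes that the causes occur independently, that the ground is wet exactly when at least one of them occurred, and a set of prior probabilities that I have simply made up for illustration. It computes the probability of each cause given wet ground by enumerating every combination of causes.

```python
from itertools import product
from math import prod

# Hypothetical prior probabilities that each cause occurred this morning.
# These numbers are invented for illustration only.
PRIORS = {"rain": 0.30, "sprinkler": 0.10, "snowmelt": 0.01, "water_main": 0.001}


def posterior(priors: dict[str, float], cause: str) -> float:
    """P(cause | ground is wet), assuming independent causes and that
    the ground is wet exactly when at least one cause occurred."""
    names = list(priors)
    p_wet = 0.0
    p_cause_and_wet = 0.0
    for values in product([True, False], repeat=len(names)):
        if not any(values):
            continue  # no cause occurred, so the ground stays dry
        world = dict(zip(names, values))
        # Probability of this exact combination of causes.
        weight = prod(priors[n] if world[n] else 1 - priors[n] for n in names)
        p_wet += weight
        if world[cause]:
            p_cause_and_wet += weight
    return p_cause_and_wet / p_wet


if __name__ == "__main__":
    for name in PRIORS:
        print(f"P({name} | wet) = {posterior(PRIORS, name):.3f}")
    # "It is summer": snow is ruled out, so rain gains a little ground.
    summer = {**PRIORS, "snowmelt": 0.0}
    print(f"Summer: P(rain | wet) = {posterior(summer, 'rain'):.3f}")
```

With these invented numbers, rain comes out as by far the likeliest explanation, and ruling out snow on a summer morning nudges it higher, but its probability never reaches 1: as long as any other cause, such as the water main, has some chance of occurring, wet ground cannot make rain certain.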

Contrived as this rainfall example is, it captures the essence of reasoning in the natural sciences. Medicine, in particular, is plagued by this kind of uncertainty. A patient comes in with an effect, say a fever, and the cause is unknown. Literally hundreds of causes exist, and one of the tasks set before the doctor is to infer the likeliest cause and start there. Each and every day, medical practitioners commit the fallacy of Affirming the Consequent; they need to, since inaction is often far more dangerous than action based on a wrong conclusion. What matters is not avoiding the inference but having the wisdom to know when to abandon it and make a new one.

This type of statistical reasoning is not limited to the STEM professions. Every day, all of us deal with unseen or poorly observed causes, and with effects that may have many parents. Each of us must embrace formally fallacious reasoning just to be able to move forward.

So, the next time you see someone employ one of these fallacies in a manner obvious to you and are inclined to reject their argument, just remember two things. First, they may not be able to see cause and effect as clearly as you do. Second, perhaps they know something you don’t, and it is you who are not seeing cause and effect clearly. In either case, by all means reject absolute certainty in the conclusion, but try to have compassion for the arguer. After all, drawing conclusions about cause and effect, even the cause and effect of a bad argument, is a difficult job.