What is Random

Call it happenstance or blind luck, but we all have a notional idea of randomness and the role it plays in our lives. We see learned authorities invoke random processes in Darwin’s theory of natural selection, in the vicissitudes of the stock market, or in the efficacy of a pharmaceutical in treating an ailment or disease. Surprisingly, a precise definition of random is often elusive and controversial. Despite the very nature of randomness, this post will try to proceed deterministically through some of the discussions over the millennia, starting with the bigger questions and ending with the smaller ones. Interestingly, deep connections between the smallest and largest questions often sneak up along the way.

At the top of the list is the question of free will versus determinism. If free will exists then random outcomes are possible but, conversely, if the universe’s evolution is predetermined then every outcome is part of a larger plan and only our own (predetermined) ignorance prevents us from perceiving it. Much of the thinking here falls under the problem of future contingents.

One of the first philosophical scenarios used to explore future contingents was Aristotle’s example of the sea battle that may or may not be fought tomorrow. Assume, for a moment, that the proposition ‘the sea battle will be fought tomorrow’ is unequivocally true. Next, let’s turn our face from the future and consider the past. If the proposition ‘the sea battle will be fought tomorrow’ is true today, then it was also true yesterday because the proposition’s truth value in the past was also resolved and locked in. If it was true yesterday then it must also have been true the day before, and so on. The same holds if the proposition ‘the sea battle will not be fought tomorrow’ is true. Which proposition holds is not at all important; what matters is that the truth value of a given future event is certain. At this point we are forced to note that if a future event has a well-defined truth value then free will and/or randomness cannot be allowed. Free will would afford the combatants the ability to cancel the battle, while a random event, say an unforeseen terrible storm, might arise and prevent one navy from arriving.

To get around the problem that a future proposition must have a definite state of true or false, as current propositions do, Aristotle created a third truth value for future events that regards them as neither true nor false but as contingent. This is the only violation of the law of the excluded middle that Aristotle allows. Philosophers seem to love to argue about this (e.g., see the introduction to The Problem of Future Contingents by Richard Taylor, originally published in The Philosophical Review, 66 (1957), now accessibly reprinted in Philosophy for the 21st Century: A Comprehensive Reader, edited by Steven M. Cahn).

The interesting thing is that while philosophers argue over how well Aristotle’s argument addresses the problem of future contingents and all the concepts that flow from it (particularly randomness), mathematicians, engineers, and scientists have all assumed that randomness is inherent in reality. For example, in a recent paper on Bell’s Theorem and random numbers, Pironio et al. start by saying “Randomness is a fundamental feature of nature…”, already assuming, as a given, an ontological position still wrestled over by philosophers.

Of course, the very roots of probability theory date back to the 17th century and often centered on characterizing the randomness seen in games of chance. Indeed, it is hard to find any general text on the subject without encountering a coin flip, a dice roll, or the standard deck of cards. The concept of the random variable doesn’t seem to have taken clear shape until the 19th century when the ideas of probability and expectation value were systematically expressed. It isn’t easy to get a clear and firm history on the flow of ideas in this field and nailing down who contributed what and when is a task best left to others.

The subject then jumped into overdrive in the 20th century, with ideas being applied to so many things that it is hard to keep track. The importance of randomness and decision making in the face of uncertainty became a central focal point in economics, control theory, and artificial intelligence. A variety of distributions were invented, and continue to be invented, for understanding experimental data, for actuarial sciences and the setting of premiums in insurance, and for predicting the reliability and lifetimes of manufactured goods. The concepts of algorithmic complexity/randomness and random signals grew in the fields of computer science and electronics. The field of dynamical systems changed how we look at random behavior in systems that exhibit chaos. Perhaps the single most influential development was the theory of quantum mechanics, which is completely deterministic in its predictions right up to but not including measurement, at which point randomness and probability come straight to the front.

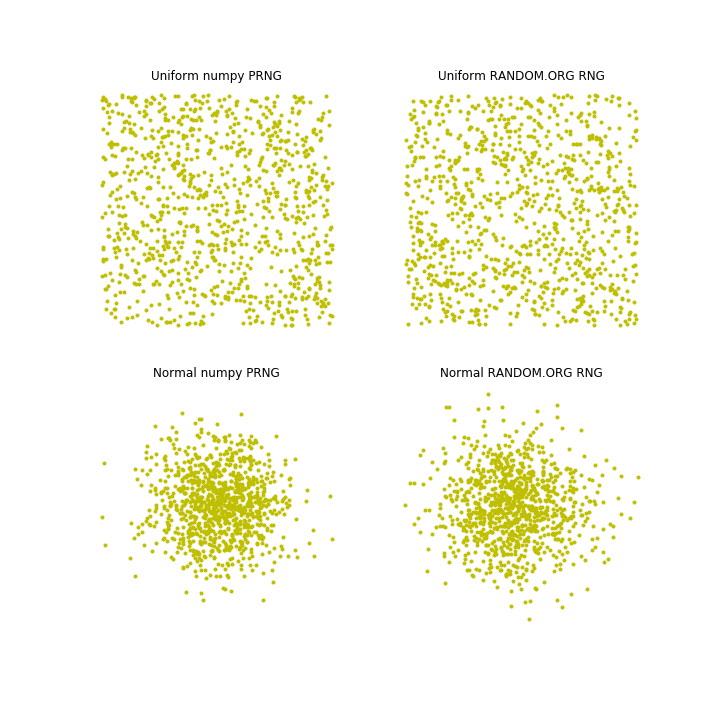

All that being said, one of the more interesting corners of the modern aspects of randomness is the generation of sequences of random numbers for a variety of computer applications. Pseudo-random number generators (PRNGs) typically deliver the sequences used in simulations of what we believe to be random effects in nature (usually under some guise of the Monte Carlo method). However, anyone who has used PRNGs professionally soon comes to suspect their performance in large-scale simulations, since they are really periodic algorithms whose short-term manifestation ‘looks’ random. In more recent years, RANDOM.ORG has been producing random numbers based on what many believe are ontologically random events in nature (in this case atmospheric noise). The accompanying figure shows a comparison between a set of uniform and normally distributed random numbers (top and bottom, respectively) produced in numpy and generated by RANDOM.ORG (left and right, respectively).

To the naked eye they look similar. Both systems produce ‘open spots’ and ‘clumps’ just as one would expect from a random process. But the gap between looking good and being good is wide, and crossing it is difficult. RANDOM.ORG’s page on randomness and the Wikipedia article on tests for randomness detail the various struggles in constructing adequate tests, but the long and short of it is that the difficulty lies in the fact that, after millennia of talking about chance versus fate, we still know far too little about how the world works.
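The gap between looking good and being good can be illustrated with a small sketch. The toy linear congruential generator below, with parameters chosen purely for illustration (a = 5, c = 3, m = 16), is not what numpy or RANDOM.ORG uses. The helper names `lcg`, `period`, and `chi_square_uniform` are likewise hypothetical. The frequency test is only one of many possible tests for randomness. For four bins, a chi-square statistic below roughly 7.81 is conventionally read as consistent with uniformity at the 5% level.

```python
import random
from itertools import islice


def lcg(seed, a=5, c=3, m=16):
    """Yield values in [0, 1) from a toy linear congruential generator.

    The parameters are illustrative assumptions, picked so the
    generator cycles through all 16 of its states.
    """
    state = seed
    while True:
        state = (a * state + c) % m
        yield state / m


def period(values):
    """Return the number of steps between the two sightings of the first repeated value."""
    seen = {}
    for step, value in enumerate(values):
        if value in seen:
            return step - seen[value]
        seen[value] = step
    return None  # no repeat found in a finite input


def chi_square_uniform(samples, bins=4):
    """Chi-square frequency statistic for samples assumed to lie in [0, 1)."""
    counts = [0] * bins
    for x in samples:
        index = min(int(x * bins), bins - 1)  # guard against x == 1.0
        counts[index] += 1
    expected = len(samples) / bins
    return sum((count - expected) ** 2 / expected for count in counts)


# The toy generator repeats itself after only a handful of draws...
print("toy LCG period:", period(lcg(0)))

# ...yet over whole cycles it fills every bin equally, so the frequency
# test alone cannot flag the repetition.
toy_samples = list(islice(lcg(0), 1600))
print("toy LCG chi-square:", chi_square_uniform(toy_samples))

# Python's built-in generator (also a PRNG) for comparison; seed is arbitrary.
rng = random.Random(12345)
builtin_samples = [rng.random() for _ in range(1600)]
print("built-in PRNG chi-square:", chi_square_uniform(builtin_samples))
```

A sequence that is blatantly periodic can therefore score perfectly on one test, which is part of why practical test suites combine many different checks (runs, gaps, serial correlation, and so on) rather than relying on a single one.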