A Flip of the Coin

Ideological conflicts are often the bitterest arguments that arise in the race of Man. Whether they are the religious wars of post-Reformation Europe, the polarizing arguments of modern US politics, or the simple disputes over which is better, PCs or Macs, or whether somebody should be a dog-person or a cat-person, these conflicts are always passionate and, while important, they are, in some respects, pointless. Clearly, adherents of both sides always have a good reason for supporting their position; if they didn’t, they wouldn’t support it so vehemently. Those bystanders without a strong opinion one way or another are left to just shake their heads.

One such ideological conflict is the strong, often mean-spirited, argument between the Frequentists and the Bayesians over the right way to characterize or define probabilities. For much of this particular cultural hotspot, I’ve been a bystander. By training and early practice, an outsider would have characterized me as a Frequentist since I am comfortable with and enjoy using sampling techniques, like classical Monte Carlo, to investigate the evolution of a given probability distribution. Over the past 6 or 7 years, I’ve come to a better appreciation of Bayesian methods and increasingly find myself seeing the complementary utility of both.

Nonetheless, finding a simple scenario that captures the essential aspects of these schools of thought and is easy to articulate has been elusive – that is, until recently. I now believe I’ve found a reasonably compact and understandable way to demonstrate the complementary nature of these techniques through the flip of a coin. (Although I am quite sure that there is great room to improve it across the board.)

Before diving into the coin flip model, I would like to summarize the differences between the Frequentist and Bayesian camps. While the coin-flip model is strictly my own – based on my own thoughts and observations – the following summary is heavily influenced by the online book entitled Entropic Inference and the Foundations of Physics, by Ariel Caticha.

The reader may ask why people just can’t agree on a single definition of probability. Why do the two camps even matter?

On the notion of probability, Caticha has this to say

The Frequentist model of probability is based on what I call the gambling notion. Take a probability-based scenario, like throwing a pair of dice, and repeat for a huge number of trials. The probability of a random event occurring, say the rolling of snake eyes (two 1’s), is empirically determined by how frequently it shows up compared to the total number of trials. The advantage that this method has is that, when applicable, it has a well-defined operational procedure that doesn’t depend on human judgement. It is seen as an objective way of assigning probabilities.

It suffers in two regards. First, the notion of what random means is a poorly-defined philosophical concept and different definitions lead to different interpretations. Second, the method fails entirely in those circumstances where trials cannot be practically repeated. This failure manifests itself in two quite distinct ways. In the first, the question being asked is entirely ill-suited to repeated trials. For example, Caticha cites the probability of there being life on Mars as one such question. Such a question drives allocation of resources in space programs around the world but is not subject to the creation and analysis of an ensemble of trials. In the second, the scenario may naturally lend itself to experimental trials but the trials may be prohibitively expensive. In these cases, it is common to build a computational model which is subject to a classical Monte Carlo method in which initially assumed distributions are mapped to final distributions by the action of the trial. Both the mechanics of the trial and the assumed initial distribution are subjective, and so are the results obtained.
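As a rough sketch of the gambling notion, the following Python snippet rolls a simulated pair of fair dice many times and reports how often snake eyes shows up. The function name `estimate_snake_eyes`, the number of trials, and the seed are illustrative choices, and the dice are assumed to be fair and independent, which is itself one of the subjective modeling inputs discussed above.

```python
import random


def estimate_snake_eyes(num_trials, seed=None):
    """Estimate the probability of rolling two 1's by repeated trials.

    Assumes two fair, independent six-sided dice (a modeling choice).
    """
    if num_trials <= 0:
        raise ValueError("num_trials must be positive")

    rng = random.Random(seed)  # private generator so runs can be reproduced
    hits = 0
    for _ in range(num_trials):
        die_a = rng.randint(1, 6)  # randint includes both endpoints
        die_b = rng.randint(1, 6)
        if die_a == 1 and die_b == 1:
            hits += 1

    # Frequentist estimate: occurrences divided by total trials
    return hits / num_trials


if __name__ == "__main__":
    for n in (100, 10_000, 1_000_000):
        print(n, estimate_snake_eyes(n, seed=0))
    print("exact value for fair dice:", 1 / 36)
```

With only a few trials the estimate can wander quite a bit; it should settle toward 1/36 as the number of trials grows, which is exactly the operational procedure the Frequentist definition relies on.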

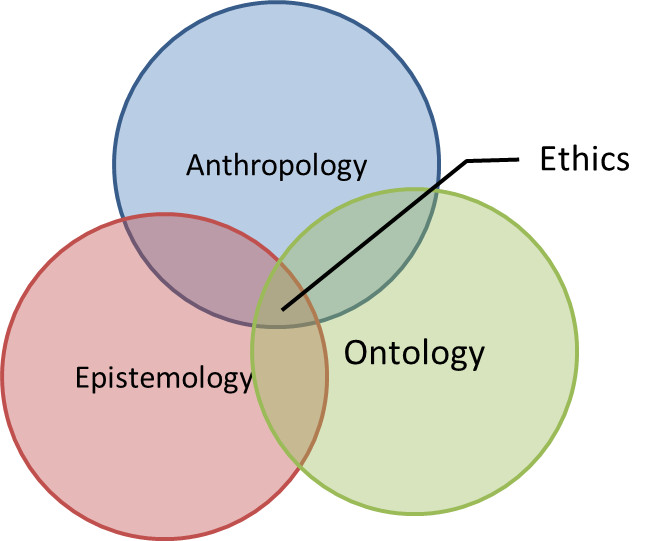

The Bayesian approach relies heavily on Bayes’ theorem and, in doing so, allows the user to argue consistently about issues like the life-on-Mars question without needing to define “random” or determine how to implement trials. This approach eschews the notion of objective determination of probability and, instead, views probability as an epistemological question about knowledge and confidence. Thus two observers can look at the same scenario and quite naturally and honestly assign very different probabilities to the outcome based on each one’s judgement. The drawback of this method is that it is much harder to reach a consensus in a group setting.
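To make the point about two observers concrete, here is a minimal sketch of Bayes’ theorem for a yes/no hypothesis. Everything numerical in it is invented purely for illustration: the two priors, the two likelihoods, and the helper name `posterior` are assumptions, not values drawn from Caticha or from any real data.

```python
def posterior(prior, likelihood_if_true, likelihood_if_false):
    """Bayes' theorem for a binary hypothesis H given evidence E.

    P(H|E) = P(E|H) P(H) / [P(E|H) P(H) + P(E|not H) P(not H)]
    """
    numerator = likelihood_if_true * prior
    evidence = numerator + likelihood_if_false * (1.0 - prior)
    if evidence == 0:
        raise ValueError("the evidence has zero probability under both hypotheses")
    return numerator / evidence


if __name__ == "__main__":
    # Hypothetical numbers: both observers agree on how likely the evidence
    # is under each hypothesis, but they start from different priors.
    likelihood_if_true = 0.8
    likelihood_if_false = 0.3

    skeptic = posterior(0.1, likelihood_if_true, likelihood_if_false)
    optimist = posterior(0.5, likelihood_if_true, likelihood_if_false)

    print("skeptic's posterior: ", round(skeptic, 3))
    print("optimist's posterior:", round(optimist, 3))
```

Both observers apply the same rule to the same evidence, yet they end with different probabilities because they began with different judgements. That is the honest disagreement described above, and it also hints at why group consensus is harder to reach.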

Now to the coin-flip model that embodies both points of view. The basic notion is this: Suppose you and I meet in the street and decide to have lunch. We can’t agree on where to go and decide to pick the restaurant based on a coin flip done in the following fashion. I’ll flip a quarter and catch it against the back of my hand. If you call the outcome correctly, we go to your choice; otherwise, we go to mine. What is the probability that we end up in your restaurant versus mine?

Well, there are actually two aspects to this problem. The first is the frequentist assignment of probability to the coin flip. Having observed lots of coin flips, we both can agree that, prior to the flip, the probability of heads versus tails is 50-50.

But after I’ve flipped the coin and caught it, the probability of it being heads is a meaningless question. It is either heads or tails – it is entirely deterministic. It is just that you don’t know what the outcome is. So the probability of you picking the right answer is not amenable to trials. Sure, we could repeat this little ritual every time we go out to lunch, but it won’t be an identical trial. Your selection of heads versus tails will be informed by all your previous attempts, how you are feeling that day, and so on.

So it seems that we need both concepts. And after all, we are human and can actually entertain multiple points of view on any given subject, provided we are able to get past our own ideology.