Gödel’s Theorem – General Notions

As I mentioned in last week’s blog, I was encouraged by Casti’s chapter on the Halting Theorem and questions of undecidability in logic and computing. In fact, I was inspired enough that I resolved have another go at studying Gödel’s theorem.

To give some background, many years ago I came across ‘Gödel, Escher, and Bach: An Eternal Golden Braid’ by Douglas Hofstadter. While I appreciate that his work is considered a classic, I found it difficult and ponderous. Its over 700 pages of popularized work did little to nothing to really sink home Gödel’s theorem and the connections to Turing and Church. What’s more, Hofstadter seems to say (it’s difficult to tell exactly as he mixes and muddles many concepts) that Gödel’s work supports his ideas that consciousness can emerge from purely mechanistic means at the lowest level. This point always seemed dodgy to me. Especially since Hofstadter left me with the impression that Gödel’s theorem showed a fundamental lack in basic logic not an emergent property.

For this go around, I decided that smaller was better and picked up the slim work entitled ‘Gödel’s Theorem’ by Nagel and Newman. What a difference a serious exposition makes. Nagel and Newman present all the essential flavor and some of the machinery that Gödel used in a mere 102 pages.

Note that formal logic is not one of my strong suits and the intellectual terrain was rocky and difficult. Nonetheless, Nagel and Newman’s basic program was laid out well and consisted of the following points.

To start, the whole thing was initiated by David Hilbert, whose Second Problem, challenged the mathematical community to provide absolute proof of the consistency of a formal system (specifically arithmetic) based solely on its structure. This idea of absolute proof stands in contrast to a relative proof where the system is question is put into relation to another system, whose validity and consistency is accepted. If the relation between the two is faithful, then the consistency of the second system carries over or is imparted to the first.

Hilbert was unsatisfied by the relative approach as it depended on some system being accepted at face value as being consistent and finding such a system was a tricky proposition.

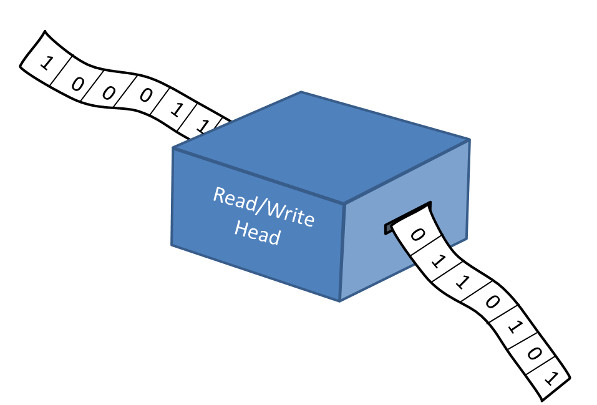

The preferred way to implement an absolute proof is to start by stripping away all meaning from the system and to deal only with abstract symbols and a mechanistic way of manipulating these symbols using well-defined rules. The example that Nagel and Newman present is the sentential calculus slightly adapted from the ‘Principia Mathematica’ by Whitehead and Russell. The codification of the formal logic system depends on symbols that fall into two classes: variables and constant signs. Variables, denoted by letters, stand for statements. For example, ‘p’ could stand for ‘all punters kick the football far’. There are six constant signs with the mapping

| ~ | Not |

| $\vee$ | Or |

| $\supset$ | If… Then… |

| $\cdot$ | And |

| ( | Left-hand punctuation |

| ) | Right-hand punctuation |

The idea is then to map all content-laden statements, like ‘If either John or Tim are late then we will miss the bus!’, to formal statements like ( (J $\vee$ T ) $\supset$ B) with all meaning and additional fluff removed. Two rules for manipulating the symbols, the Rule of Substitution and the Rule of Detachment, are adopted and four axioms are used as starting points.

In using this system, one has to sharpen one’s thinking to be able to distinguish between statements in the system (mathematical statements like ‘2 > 1’) from statements about the system (meta-mathematical statements like ‘2>1’ is true or that the ‘>’ symbol is an infix operator). One must also be careful to note the subtle differences between the symbol ‘0’, the number 0, and the concept of zero meaning nothing.

The advantage of this approach is that the proofs are cleaner, especially when there are many symbols. The disadvantage is that it takes time and effort to be able to work in this language.

The consistency of the formal system is shown when there is at least one formal statement (or formula) that cannot be derived from the axioms. The reason for this is complicated and I don’t have a good grasp on it but it goes something like this. The following formula ‘p $\supset$ (~ p $\supset$ q)’ can be derived in the sentential calculus. If the statements S and ~S are both deducible then any statement you like can be derived from the axioms (via the Rules of Substitution and Detachment) and the system is clearly inconsistent. In the words of Nagel and Newman:

For the sentential calculus, they point out that the formula ‘p $\vee$ q’ fits the bill since it is a formula, it doesn’t follow from the axiom. Thus this system is consistent. Note that there is no truth statement attached to this formula. The observation simply means that ‘p $\vee$ q’ can’t be obtained from the axioms by a mechanical manipulation. They present this argument in their chapter titled ‘An Example of a Successful Absolute Proof of Consistency’.

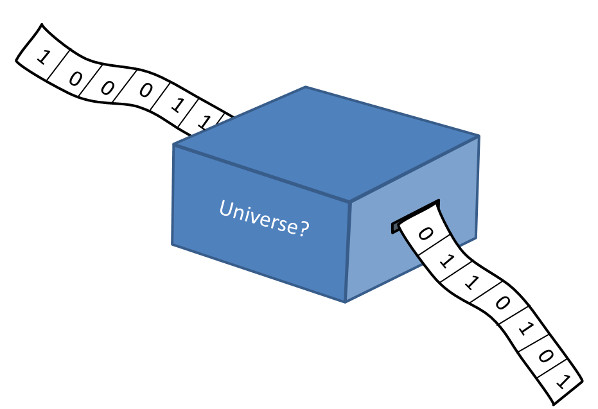

Well despite that lofty achievement, they go on to show how Gödel took a similar approach and mostly ended any hope for a proof of absolute consistency in formal systems with a great deal more complexity. Gödel used as his symbols the numerals and as his rules the basic operations of arithmetic and, in particular, the arithmetic of prime numbers. Using an ingenious mapping from of the variables and constant symbols to numbers, he not only could encode the structure of the formal system itself, he could also encode statements about the formal system as well (meta-mathematics). In the language of Hofstadter, these are self-referential statements, although Nagel and Newman don’t use this term.

Using this approach, Gödel was able to prove that there is no absolute proof of consistency. At best, the system can say about itself that it is either incomplete or inconsistent. If the system is incomplete, there are true statements that are not part of the axioms and that cannot be derived from them. Enlarging the set of axioms to include them doesn’t work since they presence begets new unprovable truths. If the system is inconsistent, then everything is ‘true’ as discussed above.

Nagel and Newman leave the reader with come final thoughts that are worth contemplation. On the hope that Hilbert’s program can be successfully completed they have this to say

They also comment on the possibility of proving consistency from an outside-looking-in approach using meta-mathematical techniques when they say

Finally, they have this to say about artificial intelligence (although that term wasn’t in vogue at the time they published

And there you have it. A whirlwind tour of Gödel’s theorem with a surprise appearance of the philosophy from antiquity and the ideas about artificial intelligence.