Proposition Calculus – Part 3: Hunt the Wumpus

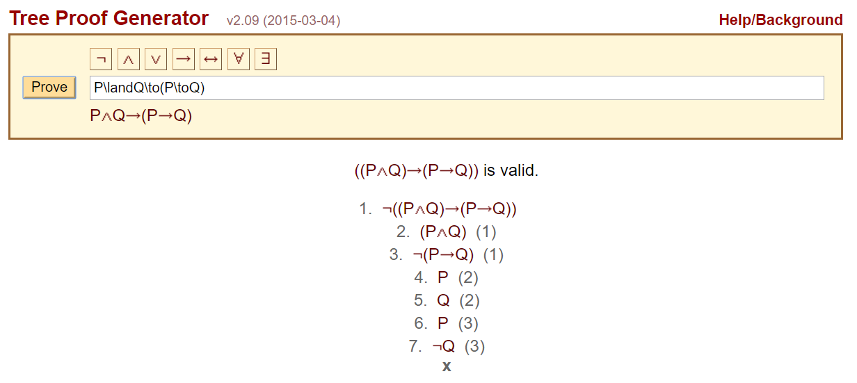

This post completes a brief three-part study of propositional calculus. In the first post, the basic notions, symbols and rules for this logical system were introduced. The second post dealt with how to prove the validity of an argument. In this context, an argument is a formal manipulation of initial assumptions into a final conclusion using the 10 inference rules. Proofs are highly algorithmic, although usually tricky, and computer programs exist that can workout proofs and determine validity. This post will be devoted to discussion some of the applications of such a decision/inference engine.

The idea of an inference engine making decisions akin to what a human would do is the core notion of an expert system. Expert systems try to encapsulate the problem-solving or decision-making techniques of human experts through the interaction of the inference engine with the knowledge base. Rather than relying on a defined procedural algorithm for moving data back-and-forth, the expert system operators on a set of initial premises using its inference engine to link these premises to facts in its knowledge base. These linked facts then acts as the new premises and the chain continues until the goal is met or the system declares that it has had enough.

There are two different approaches to building the inferential links: forward-chaining and backward chaining.

Forward-chaining works much like syllogistic logic starting from the premises and working forward to the conclusion. The example in Wikipedia is particularly nice in that it demonstrates the method using, perhaps, the most famous syllogism in logic. The knowledge base would have a rule reading something like:

\[ Man(x) \implies Mortal(x) \]

This rule means that anytime the object $x$ is identified as belonging to the set $Man$ it can be inferred that the same object belongs to the set $Mortal$. The inference engine, when given

\[ x = Socrates \; \]

immediately can determine that Socrates is mortal, thus rediscovering in cyberspace the classic syllogism

Socrates is a man

Therefore Socrates is mortal

Backwards-chaining starts with the conclusion and tries to find a path that gets it all the way back to the beginning. Backwards-chaining is often used, to comedic effect, in certain stories in which in order to get something the lead character wants (say Z) he must first acquire Y. But acquisition of Y requires first getting X and so on. Of course, the humor comes when the chain is almost complete only to break apart, link-by-link, at the last moment.

In both cases, the inference rule that is primarily employed is the Modus Ponens which asserts

\[ P \rightarrow Q, P \implies Q \; . \]

It is easy to see that the rise of the expert system explains the importance of propositional calculus.

Now the formal instance of Modus Ponens given above is deceptively simple-looking but can be quite complex when applied to real-world problems. As a result, the actual track record of expert systems is mixed. Some applications have failed (e.g. Hearsay for speech-recognition) whereas other applications have been quite successful (e.g. MYCIN or CADUCEUS for medical diagnoses).

Expert systems were quite in fashion in the late 1980s and early 1990s but their usage has receded from view. It appears that nowadays, they tend to find a place in and amongst other AI tools rather than being standalone systems.

Despite their relegated position in modern AI, expert systems and propositional calculus are still investigated today. One very fun and challenging application is to produce an agent that is capable of playing the game Hunt the Wumpus.

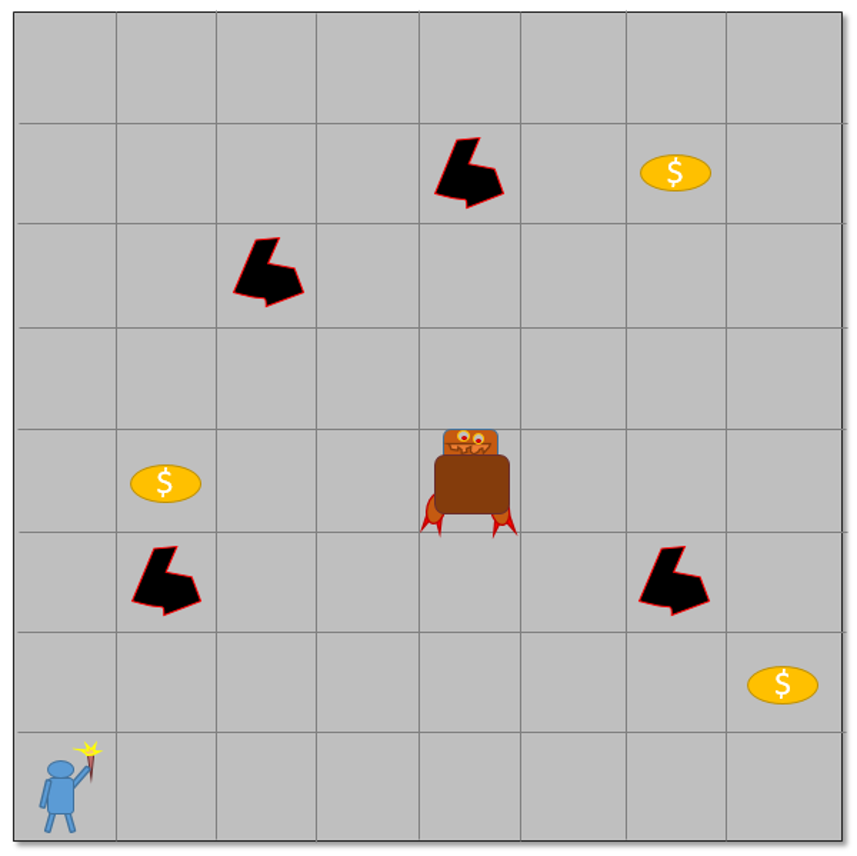

In Hunt the Wumpus, the player finds himself trapped in a dungeon. He can only see the contents of his room and the exits that issue forth. Hidden in the dungeon are pits that will plunge him to a painful death and a Wumpus, a bizarre beast, that will eat the player. Both of these threats are certain doom if the player enters the room in which they are contained. Also hidden in the dungeon is a pile of gold. The player’s goal is to find the gold and kill the Wumpus, thus allowing the player to escape with the riches.

In order for the player to navigate the dungeon, he must depend on his senses of smell and touch. When he is in a room adjacent to a pit, he senses a slight breeze blowing but he cannot tell from where. When he is in a room that is within two of the Wumpus he smells a stench that alerts him that the Wumpus is near. Armed with only one arrow, the player must use his perceptions to deduce where the Wumpus is and, firing from the safety of the adjacent room, must kill the foul beast. Note that this is a variant of the original game – as far as I can tell, all versions are variants and everyone who implements the game tinkers to some minor degree with the game play.

As the player moves around the board, he increases his knowledge base and using propositional calculus, he attempts to win the gold, kill the Wumpus, and escape to tell his tell.

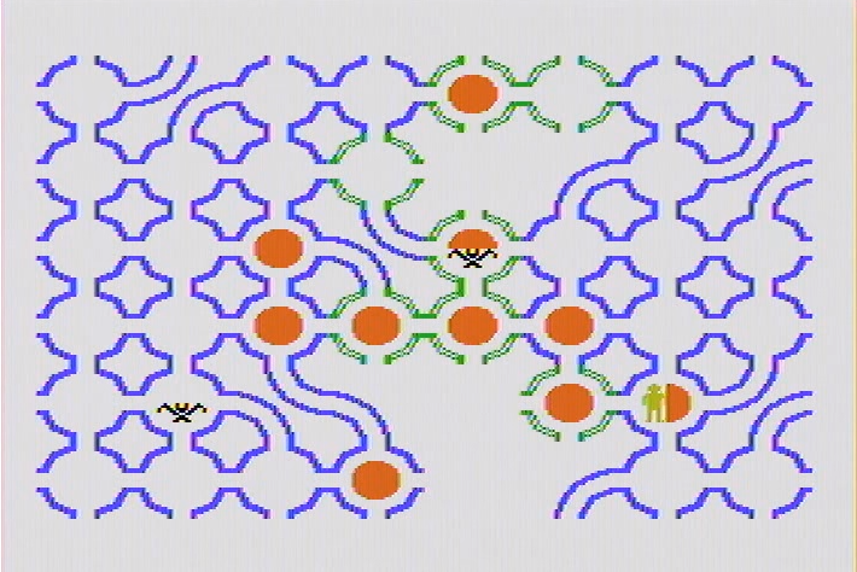

A nice example of the game play available on the TI-99/4A can be found on YouTube. In the following example, the human player has revealed a large percentage of the board.

The green colors to the rooms denote the ‘breeze observation’ while the rust color denotes the ‘stench observation’. In this version, there are also bats that, when triggered, transport the player to a random location. These bats, however, rarely seem to activiate and I’ll ignore them.

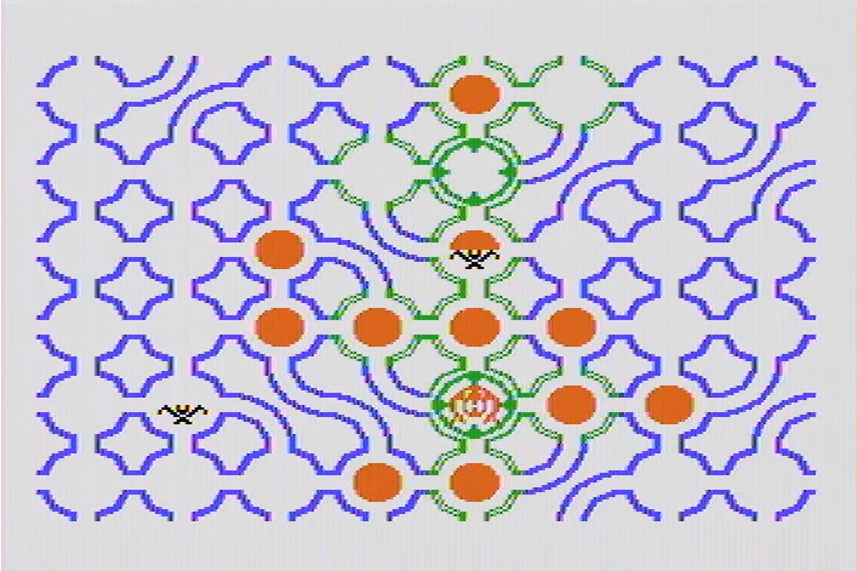

With a little thought, it is possible to see that there is a pit along the 2nd row from the top and the 5th column from the left. Likewise, the Wumpus in the 6th row and 5th column. The player recognized this and shot appropriately, killing the Wumpus and saving his skin. The following figure shows the game board revealed after th success.

This sort of deduction is relatively easy for the human player once a bit of experience is gained but not so much for the computer agent. As the video below demonstrates, even a relatively simple configuration (without bats and variable topology of the rooms) can be quite challenging.

And so I’ll close with a few final remarks. First, the propositional calculus is a subject alive and well in the AI community. It provides an underpinning for many decisions made by computer agents. Second, the skill of such systems has yet to rival human thought. Maybe it never will… time (and, perhaps the Wumpus) will tell.