The Axiom of Choice

I was a graduate student when I first heard of the Axiom of Choice. The mention, by one of the faculty, was in passing and, since it didn’t seem to impact my particular field and my desire to graduate was high, I didn’t pursue exactly what it was. All that I was left with was a lasting impression of the awe (or was it frustration or, perhaps, disgust), for lack of a better term, that I heard in his voice. That impression has stayed with me for years and I thought that this month’s column should be devoted to an introduction to the Axiom of Choice.

Years later, I am in a better position to understand the mix of emotions that came from that faculty member when it came to the Axiom of Choice. Said simply, the Axiom of Choice may very well be the most controversial of topics in modern mathematics.

The Axiom of Choice is a part of axiomatic set theory, the branch of mathematics that studies sets and attempts to distill their essential properties, from the most mundane finite sets, to the more difficult but commonly used infinite sets like the number line, and beyond (transfinite sets).

Axiomatic set theory grew out of the desire to eliminate the paradoxes of naive set theory, the most famous example being Russell’s paradox. Towards that goal, several axiomatic systems cropped up. The most commonly used is the Zermelo-Fraenkel system with the Axiom of Choice thrown in (denoted by ZFC).

The importance of the Axiom of Choice is immediately apparent in that it is the only axiom that has the pride of place (or shame) next to the names of the men who founded this system. The following table is a synthesis of the articles on the ZFC system from the Encyclopedic Dictionary of Mathematics (EDM-2) and the Wikipedia article on axiomatic set theory. The first number is taken from the EDM-2 ordering and the second, in parentheses, is from Wikipedia; likewise, the EDM-2’s name for each axiom comes first and the Wikipedia name (if different) follows in parentheses.

| Axiom Name | Content |

|---|---|

| 1. (1) Axiom of Extensionality | Sets formed by the same elements are equal |

| 2. (4) Axiom of the Unordered Pair (Axiom of Pairing) | Set X exists with only elements that are made of the elements of sets A and B |

| 3. (5) Axiom of the Sum Set (Axiom of Union) | Set X exists whose elements are all the possible elements of a set A. For example the union over {{1,2},{2,3}} is {1,2,3} |

| 4. (8) Axiom of the Power Set | Set X exists whose elements are all the possible subsets of a set A (i.e. the power set) |

| 5. (8) Axiom of the Empty Set | There exists an empty set {}, (Note that this axiom is not included as separate axiom in the Wikipedia article nor in the PBS Infinite Series video but mentioned in passing under the Axiom 8 in the former) |

| 6. (7) Axiom of Infinity | There exists a set having infinitely many members (e.g. containing all the natural numbers; 0 = {}, 1 = {0} = {{}}, 2 = {0,1} = {{},{{}}}, and so on) |

| 7. (3) Axiom of Separation (Axiom of Specification) | A set X can be separated into two subsets, the first obeying some condition and the second not |

| 8. (6) Axiom of Replacement | The image of a set under any definable function will also be a set |

| 9. (2) Axiom of Regularity (Axiom of Foundation) | Every non-empty set X contains a member Y such that X and Y are disjoint sets |

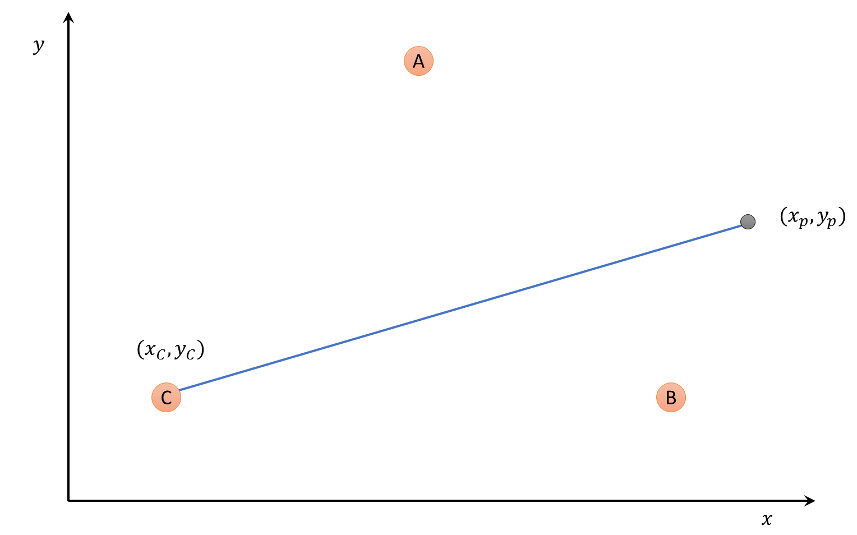

| 10. (9) Axiom of Choice (Well-ordering Theorem) | Let X be a set whose members are all non-empty. Then there exists a choice function f from X to the union of the members of X such that for all Y in X, f(Y) is in Y. |

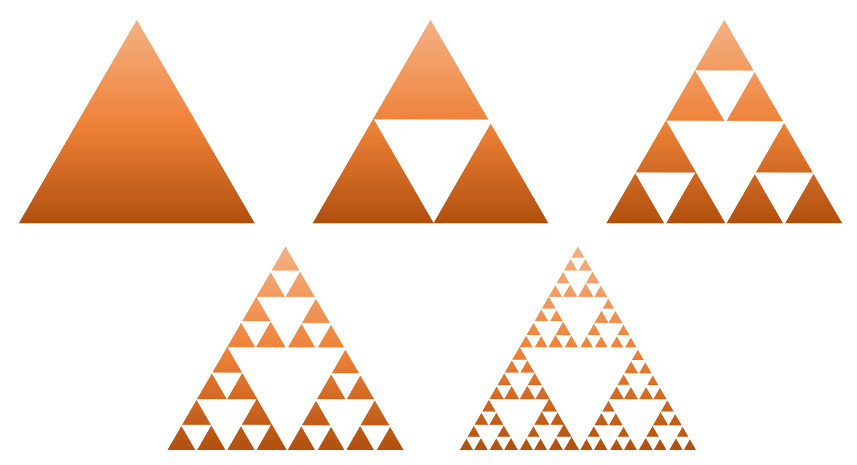
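For finite sets, several of the axioms in the table can be tried out directly. What follows is only a small sketch in Python, using `frozenset` as a stand-in for a set; the function names (`pair`, `union`, `power_set`, `von_neumann`) are my own labels for illustration and do not come from the EDM-2 or Wikipedia.

```python
from itertools import combinations


def pair(a, b):
    """Axiom of Pairing: the set whose only elements are a and b."""
    return frozenset({a, b})


def union(a):
    """Axiom of Union: the set of all elements of the members of a."""
    return frozenset(x for member in a for x in member)


def power_set(a):
    """Axiom of the Power Set: the set of all subsets of a."""
    items = list(a)
    return frozenset(
        frozenset(subset)
        for size in range(len(items) + 1)
        for subset in combinations(items, size)
    )


def von_neumann(n):
    """Encode the natural number n as the set {0, 1, ..., n-1}."""
    number = frozenset()            # 0 = {}
    for _ in range(n):
        number = number | {number}  # successor: k + 1 = k united with {k}
    return number


# The union example from the table: {{1,2},{2,3}} -> {1,2,3}
example = frozenset({frozenset({1, 2}), frozenset({2, 3})})
print(sorted(union(example)))
print(len(power_set(frozenset({1, 2, 3}))))
print(len(von_neumann(5)))
```

Of course, nothing here touches the Axiom of Infinity or the Axiom of Choice; a computer only ever holds finite sets, which is exactly why these constructions feel so harmless.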

On the face of it, the Axiom of Choice may seem to be as technically innocuous as its brother axioms and, indeed, it can be deduced from the other axioms when the collection X contains only finitely many sets. The Axiom of Choice is a genuinely separate assumption only when X contains infinitely many sets. And this is where the controversy arises.
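To see why the finite case is so tame, here is a minimal sketch of a choice function for a finite family of sets. The name `choice_function` and the default rule `min` are assumptions of mine, chosen only for illustration. The point is that the code has to state an explicit rule for picking a member, and for finitely many sets of numbers such a rule is easy to write down.

```python
def choice_function(family, rule=min):
    """Return a dict f with f[Y] in Y for every set Y in the family.

    `rule` is the explicit recipe for picking one member of a set;
    `min` works here because every set holds comparable numbers.
    """
    f = {}
    for member in family:
        if not member:
            raise ValueError("every member of the family must be non-empty")
        f[member] = rule(member)
    return f


family = frozenset({frozenset({1, 2}), frozenset({2, 3}), frozenset({7})})
f = choice_function(family)

# The defining property of a choice function: f(Y) is in Y for all Y in X.
print(all(f[Y] in Y for Y in family))
```

The trouble begins when the family is infinite and no rule like `min` is available, for example with arbitrary sets of real numbers, which need not have a least element. In that setting the Axiom of Choice asserts that `f` exists without supplying anything to put in place of `rule`.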

Before discussing that point, it is worthwhile recalling that infinite sets are not strictly the parlance of the erudite mathematician. Much of physics is built on the idea of an infinite set representing a physical process (e.g. Fourier series). And infinite sets or, more properly said, their avoidance was used in classical philosophy for all sorts of arguments (e.g. Aquinas’s arguments for the existence of God). So, the implications of the ZFC system may range further than simply a technical point on the correctness of infinite sets.

That said, the controversy surrounding the Axiom of Choice is both serious and interesting. The first controversy that the Axiom of Choice presents is the fact that, while it tells us that there is a way of selecting a member from each set, it doesn’t tell us how. In other terms, the Axiom of Choice is not constructive and, so, we are left with the problem of saying that we can choose but we don’t know how the choice is made or even, sometimes, what is chosen. It is as if a miracle happened.

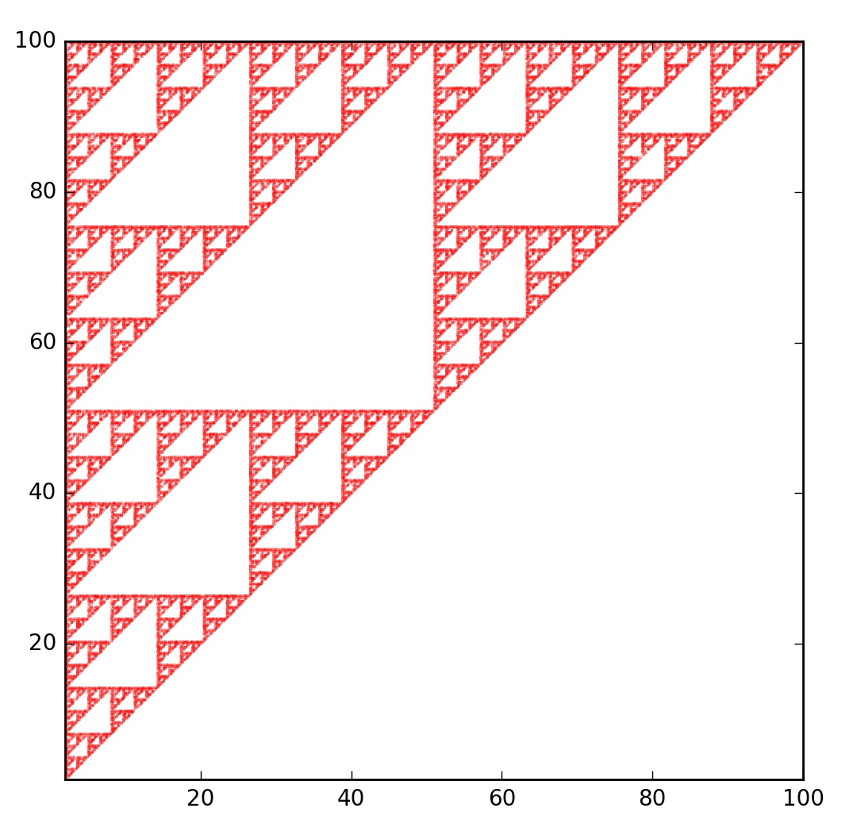

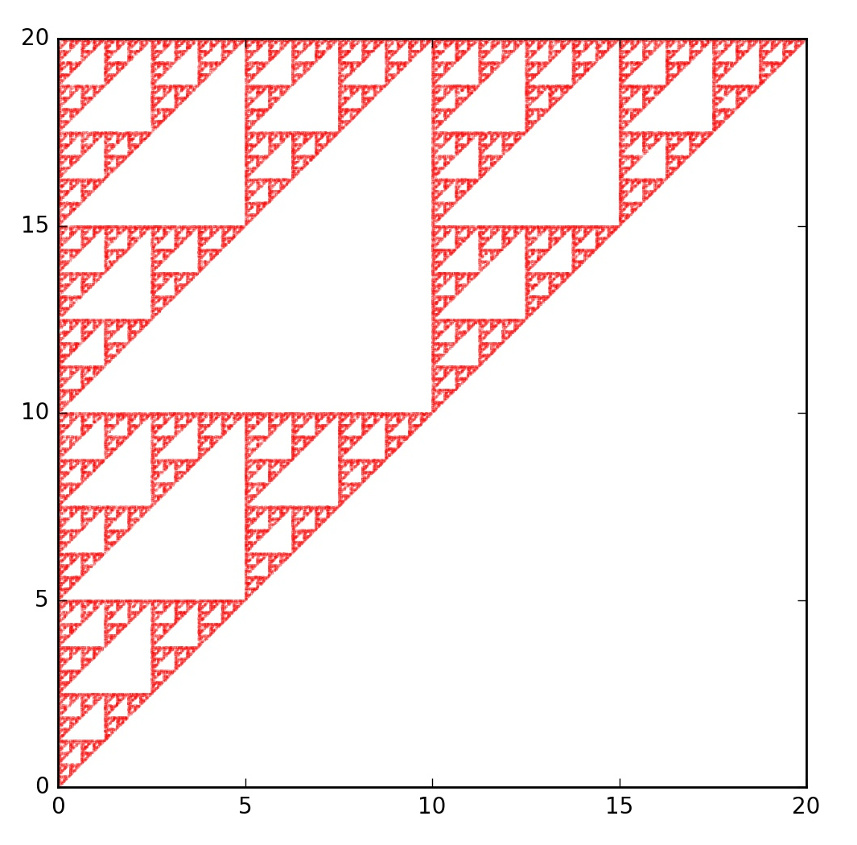

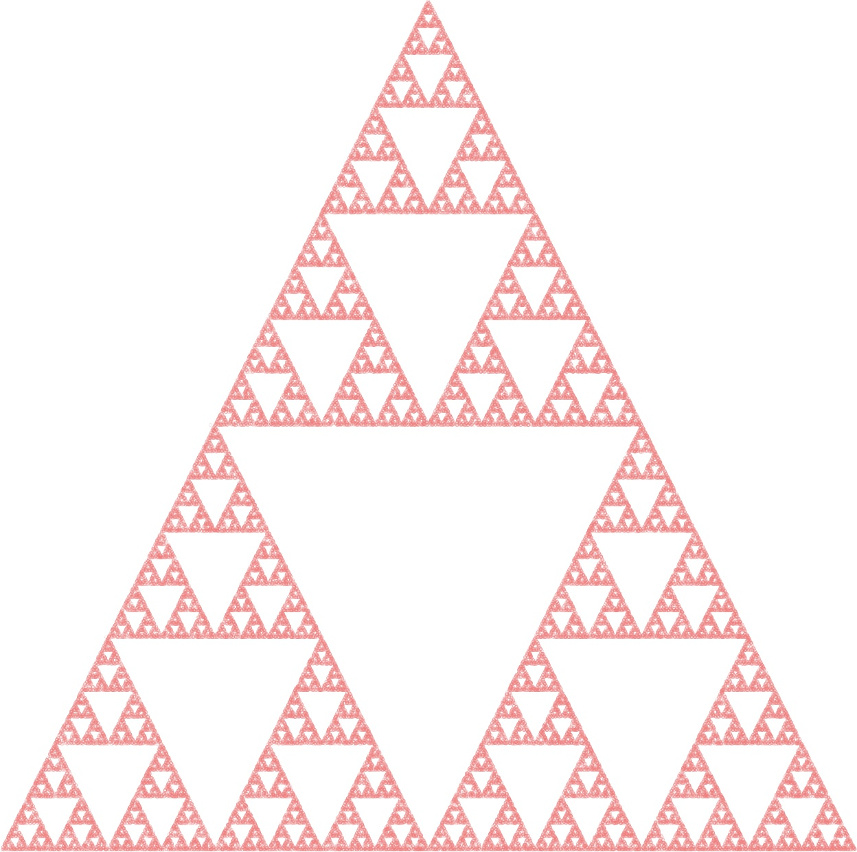

A direct consequence of this miracle, as discussed in the PBS Infinite Series video, is that the Axiom of Choice can produce sets that are measureless from perfectly measurable sets. As shown in the video, countably many shifted copies of a set S must together have a measure between 1 and 3 but no measure for S itself, whether zero or positive, is compatible with that bound. So, S is a non-measurable set.
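For readers who want the skeleton of the argument, here it is written out using the standard Vitali construction, which I am assuming is the one the video follows. The symbols μ (the measure), q_k (an enumeration of the rational numbers in [-1,1]) and S_k (the copy of S shifted by q_k) are my own notation.

```latex
% S: one representative in [0,1] from each class of  x ~ y  <=>  x - y is rational
%    (picking the representatives is the step that needs the Axiom of Choice)
% q_1, q_2, ...: an enumeration of the rationals in [-1,1];  S_k = S + q_k
\begin{align*}
  [0,1] \;\subseteq\; \bigcup_{k=1}^{\infty} S_k \;\subseteq\; [-1,2]
    &\quad\Longrightarrow\quad 1 \le \mu\Big(\bigcup_{k=1}^{\infty} S_k\Big) \le 3, \\
  S_j \cap S_k = \emptyset \ (j \ne k), \quad \mu(S_k) = \mu(S)
    &\quad\Longrightarrow\quad \mu\Big(\bigcup_{k=1}^{\infty} S_k\Big) = \sum_{k=1}^{\infty} \mu(S), \\
  \mu(S) = 0 &\quad\Longrightarrow\quad \sum_{k=1}^{\infty} \mu(S) = 0 < 1, \\
  \mu(S) > 0 &\quad\Longrightarrow\quad \sum_{k=1}^{\infty} \mu(S) = \infty > 3.
\end{align*}
```

Either value for the measure of S contradicts the bound in the first line, so no measure can be assigned to S at all.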

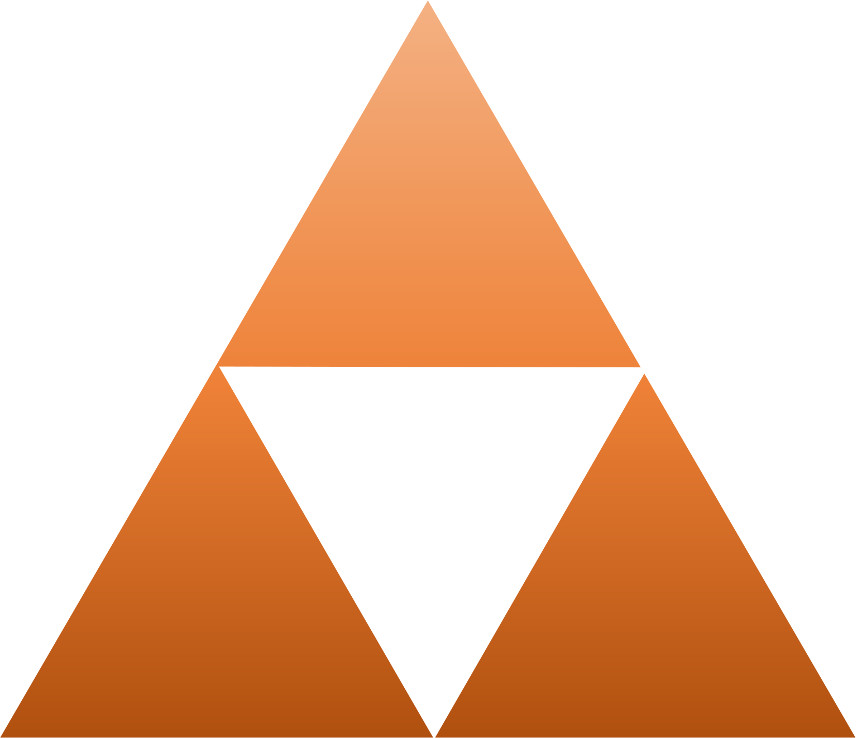

Even more disturbing is the Banach-Tarski Paradox. The essence of this paradox is that a 3-dimensional ball can be taken apart into a finite number of disjoint pieces. These pieces can then be reassembled, using only rotations and translations, to make two balls, each the same size as the original. We are supposed to be comforted by the fact that in this process, the disjoint pieces are non-measurable sets. Details explaining the process are found in the Vsauce video linked below.

I’ll close by saying that after really thinking about the Axiom of Choice and all of the controversy that comes in its wake, I now have a better idea about the mix of feelings of that academic mentioned at the beginning. I also must confess a deep concern with mathematical logic applied to infinite sets. Unfortunately, that concern seems to have no immediate mitigation, which means researchers and, for that matter, the whole human race still have a long way to go in understanding infinity.